8. Linear Equations and Matrix Algebra

8.1Overview¶

Many problems in economics and finance require solving linear equations.

In this lecture we discuss linear equations and their applications.

To illustrate the importance of linear equations, we begin with a two good model of supply and demand.

The two good case is so simple that solutions can be calculated by hand.

But often we need to consider markets containing many goods.

In the multiple goods case we face large systems of linear equations, with many equations and unknowns.

To handle such systems we need two things:

- matrix algebra (and the knowledge of how to use it) plus

- computer code to apply matrix algebra to the problems of interest.

This lecture covers these steps.

We will use the following packages:

import numpy as np

import matplotlib.pyplot as plt8.2A two good example¶

In this section we discuss a simple two good example and solve it by

- pencil and paper

- matrix algebra

The second method is more general, as we will see.

8.2.1Pencil and paper methods¶

Suppose that we have two related goods, such as

- propane and ethanol, and

- rice and wheat, etc.

To keep things simple, we label them as good 0 and good 1.

The demand for each good depends on the price of both goods:

(We are assuming demand decreases when the price of either good goes up, but other cases are also possible.)

Let’s suppose that supply is given by

Equilibrium holds when supply equals demand ( and ).

This yields the linear system

We can solve this with pencil and paper to get

Inserting these results into either (1) or (2) yields the equilibrium quantities

8.2.2Looking forward¶

Pencil and paper methods are easy in the two good case.

But what if there are many goods?

For such problems we need matrix algebra.

Before solving problems with matrix algebra, let’s first recall the basics of vectors and matrices, in both theory and computation.

8.3Vectors ¶

A vector of length is just a sequence (or array, or tuple) of numbers, which we write as or .

We can write these sequences either horizontally or vertically.

But when we use matrix operations, our default assumption is that vectors are column vectors.

The set of all -vectors is denoted by .

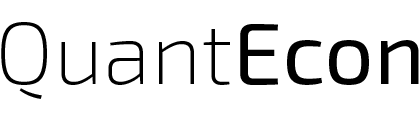

Often vectors are represented visually as arrows from the origin to the point.

Here’s a visualization.

Source

fig, ax = plt.subplots()

# Set the axes through the origin

for spine in ['left', 'bottom']:

ax.spines[spine].set_position('zero')

for spine in ['right', 'top']:

ax.spines[spine].set_color('none')

ax.set(xlim=(-5, 5), ylim=(-5, 5))

vecs = ((2, 4), (-3, 3), (-4, -3.5))

for v in vecs:

ax.annotate('', xy=v, xytext=(0, 0),

arrowprops=dict(facecolor='blue',

shrink=0,

alpha=0.7,

width=0.5))

ax.text(1.1 * v[0], 1.1 * v[1], str(v))

plt.show()

8.3.1Vector operations¶

Sometimes we want to modify vectors.

The two most common operators on vectors are addition and scalar multiplication, which we now describe.

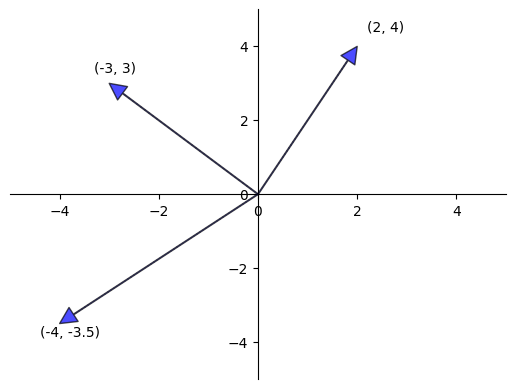

When we add two vectors, we add them element-by-element.

In general,

We can visualise vector addition in as follows.

Source

fig, ax = plt.subplots()

# Set the axes through the origin

for spine in ['left', 'bottom']:

ax.spines[spine].set_position('zero')

for spine in ['right', 'top']:

ax.spines[spine].set_color('none')

ax.set(xlim=(-2, 10), ylim=(-4, 4))

# ax.grid()

vecs = ((4, -2), (3, 3), (7, 1))

tags = ('(x1, x2)', '(y1, y2)', '(x1+x2, y1+y2)')

colors = ('blue', 'green', 'red')

for i, v in enumerate(vecs):

ax.annotate('', xy=v, xytext=(0, 0),

arrowprops=dict(color=colors[i],

shrink=0,

alpha=0.7,

width=0.5,

headwidth=8,

headlength=15))

ax.text(v[0] + 0.2, v[1] + 0.1, tags[i])

for i, v in enumerate(vecs):

ax.annotate('', xy=(7, 1), xytext=v,

arrowprops=dict(color='gray',

shrink=0,

alpha=0.3,

width=0.5,

headwidth=5,

headlength=20))

plt.show()

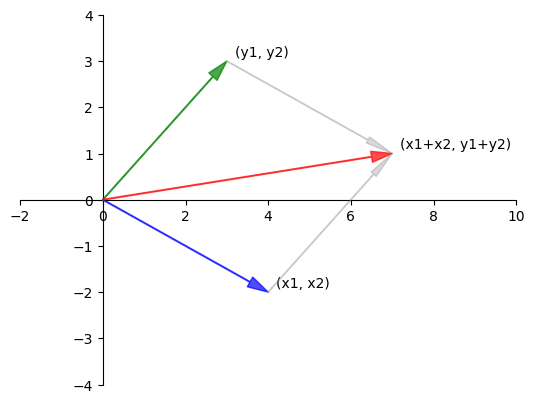

Scalar multiplication is an operation that multiplies a vector with a scalar elementwise.

More generally, it takes a number γ and a vector and produces

Scalar multiplication is illustrated in the next figure.

Source

fig, ax = plt.subplots()

# Set the axes through the origin

for spine in ['left', 'bottom']:

ax.spines[spine].set_position('zero')

for spine in ['right', 'top']:

ax.spines[spine].set_color('none')

ax.set(xlim=(-5, 5), ylim=(-5, 5))

x = (2, 2)

ax.annotate('', xy=x, xytext=(0, 0),

arrowprops=dict(facecolor='blue',

shrink=0,

alpha=1,

width=0.5))

ax.text(x[0] + 0.4, x[1] - 0.2, '$x$', fontsize='16')

scalars = (-2, 2)

x = np.array(x)

for s in scalars:

v = s * x

ax.annotate('', xy=v, xytext=(0, 0),

arrowprops=dict(facecolor='red',

shrink=0,

alpha=0.5,

width=0.5))

ax.text(v[0] + 0.4, v[1] - 0.2, f'${s} x$', fontsize='16')

plt.show()

In Python, a vector can be represented as a list or tuple,

such as x = [2, 4, 6] or x = (2, 4, 6).

However, it is more common to represent vectors with NumPy arrays.

One advantage of NumPy arrays is that scalar multiplication and addition have very natural syntax.

x = np.ones(3) # Vector of three ones

y = np.array((2, 4, 6)) # Converts tuple (2, 4, 6) into a NumPy array

x + y # Add (element-by-element)array([3., 5., 7.])4 * x # Scalar multiplyarray([4., 4., 4.])8.3.2Inner product and norm¶

The inner product of vectors is defined as

The norm of a vector represents its “length” (i.e., its distance from the zero vector) and is defined as

The expression can be thought of as the “distance” between and .

The inner product and norm can be computed as follows

np.sum(x*y) # Inner product of x and ynp.float64(12.0)x @ y # Another way to compute the inner productnp.float64(12.0)np.sqrt(np.sum(x**2)) # Norm of x, method onenp.float64(1.7320508075688772)np.linalg.norm(x) # Norm of x, method twonp.float64(1.7320508075688772)8.4Matrix operations¶

When we discussed linear price systems, we mentioned using matrix algebra.

Matrix algebra is similar to algebra for numbers.

Let’s review some details.

8.4.1Addition and scalar multiplication¶

Just as was the case for vectors, we can add, subtract and scalar multiply matrices.

Scalar multiplication and addition are generalizations of the vector case:

In general for a number γ and any matrix ,

In general,

In the latter case, the matrices must have the same shape in order for the definition to make sense.

8.4.2Matrix multiplication¶

We also have a convention for multiplying two matrices.

The rule for matrix multiplication generalizes the idea of inner products discussed above.

If and are two matrices, then their product is formed by taking as its -th element the inner product of the -th row of and the -th column of .

If is and is , then to multiply and we require , and the resulting matrix is .

As an important special case, consider multiplying matrix and column vector .

According to the preceding rule, this gives us an column vector.

Here is a simple illustration of multiplication of two matrices.

There are many tutorials to help you further visualize this operation, such as

- this one, or

- the discussion on the Wikipedia page.

One important special case is the identity matrix, which has ones on the principal diagonal and zero elsewhere:

It is a useful exercise to check the following:

- if is and is the identity matrix, then , and

- if is the identity matrix, then .

8.4.3Matrices in NumPy¶

NumPy arrays are also used as matrices, and have fast, efficient functions and methods for all the standard matrix operations.

You can create them manually from tuples of tuples (or lists of lists) as follows

A = ((1, 2),

(3, 4))

type(A)tupleA = np.array(A)

type(A)numpy.ndarrayA.shape(2, 2)The shape attribute is a tuple giving the number of rows and columns ---

see here

for more discussion.

To get the transpose of A, use A.transpose() or, more simply, A.T.

There are many convenient functions for creating common matrices (matrices of zeros, ones, etc.) --- see here.

Since operations are performed elementwise by default, scalar multiplication and addition have very natural syntax.

A = np.identity(3) # 3 x 3 identity matrix

B = np.ones((3, 3)) # 3 x 3 matrix of ones

2 * Aarray([[2., 0., 0.],

[0., 2., 0.],

[0., 0., 2.]])A + Barray([[2., 1., 1.],

[1., 2., 1.],

[1., 1., 2.]])To multiply matrices we use the @ symbol.

8.4.4Two good model in matrix form¶

We can now revisit the two good model and solve (3) numerically via matrix algebra.

This involves some extra steps but the method is widely applicable --- as we will see when we include more goods.

First we rewrite (1) as

Recall that is the price of two goods.

(Please check that represents the same equations as (1).)

We rewrite (2) as

Now equality of supply and demand can be expressed as , or

We can rearrange the terms to get

If all of the terms were numbers, we could solve for prices as .

Matrix algebra allows us to do something similar: we can solve for equilibrium prices using the inverse of :

Before we implement the solution let us consider a more general setting.

8.4.5More goods¶

It is natural to think about demand systems with more goods.

For example, even within energy commodities there are many different goods, including crude oil, gasoline, coal, natural gas, ethanol, and uranium.

The prices of these goods are related, so it makes sense to study them together.

Pencil and paper methods become very time consuming with large systems.

But fortunately the matrix methods described above are essentially unchanged.

In general, we can write the demand equation as , where

- is an vector of demand quantities for different goods.

- is an “coefficient” matrix.

- is an vector of constant values.

Similarly, we can write the supply equation as , where

- is an vector of supply quantities for the same goods.

- is an “coefficient” matrix.

- is an vector of constant values.

To find an equilibrium, we solve , or

Then the price vector of the n different goods is

8.4.6General linear systems¶

A more general version of the problem described above looks as follows.

The objective here is to solve for the “unknowns” .

We take as given the coefficients and constants .

Notice that we are treating a setting where the number of unknowns equals the number of equations.

This is the case where we are most likely to find a well-defined solution.

(The other cases are referred to as overdetermined and underdetermined systems of equations --- we defer discussion of these cases until later lectures.)

In matrix form, the system (27) becomes

When considering problems such as (28), we need to ask at least some of the following questions

- Does a solution actually exist?

- If a solution exists, how should we compute it?

8.5Solving systems of equations¶

Recall again the system of equations (27), which we write here again as

The problem we face is to find a vector that solves (30), taking and as given.

We may not always find a unique vector that solves (30).

We illustrate two such cases below.

8.5.1No solution¶

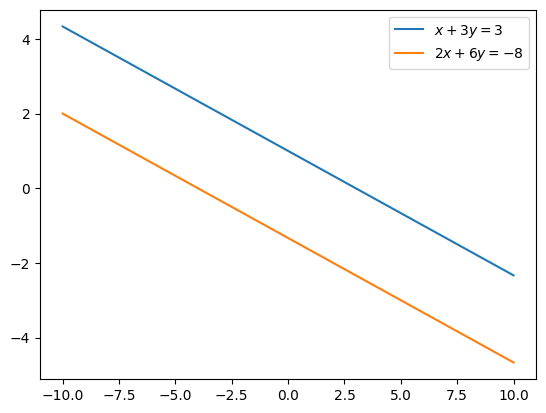

Consider the system of equations given by,

It can be verified manually that this system has no possible solution.

To illustrate why this situation arises let’s plot the two lines.

fig, ax = plt.subplots()

x = np.linspace(-10, 10)

plt.plot(x, (3-x)/3, label=f'$x + 3y = 3$')

plt.plot(x, (-8-2*x)/6, label=f'$2x + 6y = -8$')

plt.legend()

plt.show()

Clearly, these are parallel lines and hence we will never find a point such that these lines intersect.

Thus, this system has no possible solution.

We can rewrite this system in matrix form as

It can be noted that the row of matrix is just a scalar multiple of the row of matrix .

The rows of matrix in this case are called linearly dependent.

8.5.2Many solutions¶

Now consider,

Any vector such that will solve the above system.

Since we can find infinite such vectors this system has infinitely many solutions.

This is because the rows of the corresponding matrix

are linearly dependent --- can you see why?

We now impose conditions on in (30) that rule out these problems.

8.5.3Nonsingular matrices¶

To every square matrix we can assign a unique number called the determinant.

For matrices, the determinant is given by,

If the determinant of is not zero, then we say that is nonsingular.

A square matrix is nonsingular if and only if the rows and columns of are linearly independent.

A more detailed explanation of matrix inverse can be found here.

You can check yourself that the in (32) and (34) with linearly dependent rows are singular matrices.

This gives us a useful one-number summary of whether or not a square matrix can be inverted.

In particular, a square matrix has a nonzero determinant, if and only if it possesses an inverse matrix , with the property that .

As a consequence, if we pre-multiply both sides of by , we get

This is the solution to --- the solution we are looking for.

8.5.4Linear equations with NumPy¶

In the two good example we obtained the matrix equation,

where , and are given by (20) and (21).

This equation is analogous to (36) with , , and .

We can now solve for equilibrium prices with NumPy’s linalg submodule.

All of these routines are Python front ends to time-tested and highly optimized FORTRAN code.

C = ((10, 5), # Matrix C

(5, 10))Now we change this to a NumPy array.

C = np.array(C)D = ((-10, -5), # Matrix D

(-1, -10))

D = np.array(D)h = np.array((100, 50)) # Vector h

h.shape = 2,1 # Transforming h to a column vectorfrom numpy.linalg import det, inv

A = C - D

# Check that A is nonsingular (non-zero determinant), and hence invertible

det(A)np.float64(340.0000000000001)A_inv = inv(A) # compute the inverse

A_invarray([[ 0.05882353, -0.02941176],

[-0.01764706, 0.05882353]])p = A_inv @ h # equilibrium prices

parray([[4.41176471],

[1.17647059]])q = C @ p # equilibrium quantities

qarray([[50. ],

[33.82352941]])Notice that we get the same solutions as the pencil and paper case.

We can also solve for using solve(A, h) as follows.

from numpy.linalg import solve

p = solve(A, h) # equilibrium prices

parray([[4.41176471],

[1.17647059]])q = C @ p # equilibrium quantities

qarray([[50. ],

[33.82352941]])Observe how we can solve for by either via inv(A) @ y, or using solve(A, y).

The latter method uses a different algorithm that is numerically more stable and hence should be the default option.

8.6Exercises¶

Solution to Exercise 1

The generated system would be:

In matrix form we will write this as:

import numpy as np

from numpy.linalg import det

A = np.array([[35, -5, -5], # matrix A

[-5, 25, -10],

[-5, -5, 15]])

b = np.array((100, 75, 55)) # column vector b

b.shape = (3, 1)

det(A) # check if A is nonsingularnp.float64(9999.99999999999)# Using inverse

from numpy.linalg import det

A_inv = inv(A)

p = A_inv @ b

parray([[4.9625],

[7.0625],

[7.675 ]])# Using numpy.linalg.solve

from numpy.linalg import solve

p = solve(A, b)

parray([[4.9625],

[7.0625],

[7.675 ]])The solution is given by:

Solution to Exercise 2

import numpy as np

from numpy.linalg import inv# Using matrix algebra

A = np.array([[1, -9], # matrix A

[1, -7],

[1, -3]])

A_T = np.transpose(A) # transpose of matrix A

b = np.array((1, 3, 8)) # column vector b

b.shape = (3, 1)

x = inv(A_T @ A) @ A_T @ b

xarray([[11.46428571],

[ 1.17857143]])# Using numpy.linalg.lstsq

x, res, _, _ = np.linalg.lstsq(A, b, rcond=None)Source

print(f"x\u0302 = {x}")

print(f"\u2016Ax\u0302 - b\u2016\u00B2 = {res[0]}")x̂ = [[11.46428571]

[ 1.17857143]]

‖Ax̂ - b‖² = 0.07142857142857066

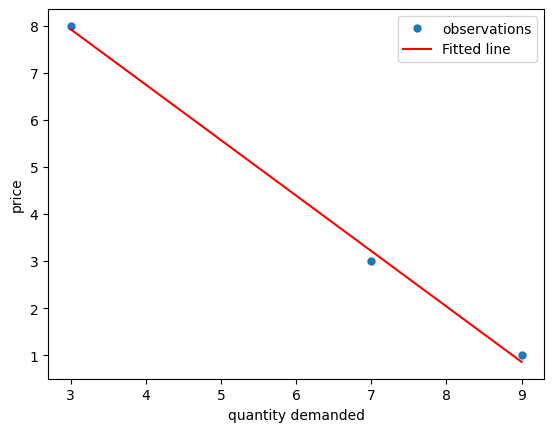

Here is a visualization of how the least squares method approximates the equation of a line connecting a set of points.

We can also describe this as “fitting” a line between a set of points.

fig, ax = plt.subplots()

p = np.array((1, 3, 8))

q = np.array((9, 7, 3))

a, b = x

ax.plot(q, p, 'o', label='observations', markersize=5)

ax.plot(q, a - b*q, 'r', label='Fitted line')

plt.xlabel('quantity demanded')

plt.ylabel('price')

plt.legend()

plt.show()

8.6.1Further reading¶

The documentation of the numpy.linalg submodule can be found here.

More advanced topics in linear algebra can be found here.

Creative Commons License – This work is licensed under a Creative Commons Attribution-ShareAlike 4.0 International.