33. Markov Chains: Basic Concepts

In addition to what’s in Anaconda, this lecture will need the following libraries:

!pip install quanteconOutput

Requirement already satisfied: quantecon in /opt/hostedtoolcache/Python/3.12.9/x64/lib/python3.12/site-packages (0.8.0)

Requirement already satisfied: numba>=0.49.0 in /opt/hostedtoolcache/Python/3.12.9/x64/lib/python3.12/site-packages (from quantecon) (0.61.0)

Requirement already satisfied: numpy>=1.17.0 in /opt/hostedtoolcache/Python/3.12.9/x64/lib/python3.12/site-packages (from quantecon) (2.1.3)

Requirement already satisfied: requests in /opt/hostedtoolcache/Python/3.12.9/x64/lib/python3.12/site-packages (from quantecon) (2.32.3)

Requirement already satisfied: scipy>=1.5.0 in /opt/hostedtoolcache/Python/3.12.9/x64/lib/python3.12/site-packages (from quantecon) (1.15.2)

Requirement already satisfied: sympy in /opt/hostedtoolcache/Python/3.12.9/x64/lib/python3.12/site-packages (from quantecon) (1.13.3)

Requirement already satisfied: llvmlite<0.45,>=0.44.0dev0 in /opt/hostedtoolcache/Python/3.12.9/x64/lib/python3.12/site-packages (from numba>=0.49.0->quantecon) (0.44.0)

Requirement already satisfied: charset-normalizer<4,>=2 in /opt/hostedtoolcache/Python/3.12.9/x64/lib/python3.12/site-packages (from requests->quantecon) (3.4.1)

Requirement already satisfied: idna<4,>=2.5 in /opt/hostedtoolcache/Python/3.12.9/x64/lib/python3.12/site-packages (from requests->quantecon) (3.10)

Requirement already satisfied: urllib3<3,>=1.21.1 in /opt/hostedtoolcache/Python/3.12.9/x64/lib/python3.12/site-packages (from requests->quantecon) (2.3.0)

Requirement already satisfied: certifi>=2017.4.17 in /opt/hostedtoolcache/Python/3.12.9/x64/lib/python3.12/site-packages (from requests->quantecon) (2025.1.31)

Requirement already satisfied: mpmath<1.4,>=1.1.0 in /opt/hostedtoolcache/Python/3.12.9/x64/lib/python3.12/site-packages (from sympy->quantecon) (1.3.0)

33.1Overview¶

Markov chains provide a way to model situations in which the past casts shadows on the future.

By this we mean that observing measurements about a present situation can help us forecast future situations.

This can be possible when there are statistical dependencies among measurements of something taken at different points of time.

For example,

- inflation next year might co-vary with inflation this year

- unemployment next month might co-vary with unemployment this month

Markov chains are a workhorse for economics and finance.

The theory of Markov chains is beautiful and provides many insights into probability and dynamics.

In this lecture, we will

- review some of the key ideas from the theory of Markov chains and

- show how Markov chains appear in some economic applications.

Let’s start with some standard imports:

import matplotlib.pyplot as plt

import quantecon as qe

import numpy as np

import networkx as nx

from matplotlib import cm

import matplotlib as mpl

from mpl_toolkits.mplot3d import Axes3D

from matplotlib.animation import FuncAnimation

from IPython.display import HTML

from matplotlib.patches import Polygon

from mpl_toolkits.mplot3d.art3d import Poly3DCollection33.2Definitions and examples¶

In this section we provide some definitions and elementary examples.

33.2.1Stochastic matrices¶

Recall that a probability mass function over possible outcomes is a nonnegative -vector that sums to one.

For example, is a probability mass function over 3 outcomes.

A stochastic matrix (or Markov matrix) is an square matrix such that each row of is a probability mass function over outcomes.

In other words,

- each element of is nonnegative, and

- each row of sums to one

If is a stochastic matrix, then so is the -th power for all .

You are asked to check this in an exercise below.

33.2.2Markov chains¶

Now we can introduce Markov chains.

Before defining a Markov chain rigorously, we’ll give some examples.

33.2.2.1Example 1¶

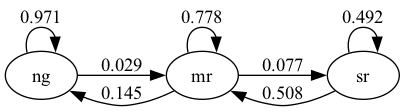

From US unemployment data, Hamilton Hamilton (2005) estimated the following dynamics.

Here there are three states

- “ng” represents normal growth

- “mr” represents mild recession

- “sr” represents severe recession

The arrows represent transition probabilities over one month.

For example, the arrow from mild recession to normal growth has 0.145 next to it.

This tells us that, according to past data, there is a 14.5% probability of transitioning from mild recession to normal growth in one month.

The arrow from normal growth back to normal growth tells us that there is a 97% probability of transitioning from normal growth to normal growth (staying in the same state).

Note that these are conditional probabilities --- the probability of transitioning from one state to another (or staying at the same one) conditional on the current state.

To make the problem easier to work with numerically, let’s convert states to numbers.

In particular, we agree that

- state 0 represents normal growth

- state 1 represents mild recession

- state 2 represents severe recession

Let record the value of the state at time .

Now we can write the statement “there is a 14.5% probability of transitioning from mild recession to normal growth in one month” as

We can collect all of these conditional probabilities into a matrix, as follows

Notice that is a stochastic matrix.

Now we have the following relationship

This holds for any between 0 and 2.

In particular, is the probability of transitioning from state to state in one month.

33.2.2.2Example 2¶

Consider a worker who, at any given time , is either unemployed (state 0) or employed (state 1).

Suppose that, over a one-month period,

- the unemployed worker finds a job with probability .

- the employed worker loses her job and becomes unemployed with probability .

Given the above information, we can write out the transition probabilities in matrix form as

For example,

Suppose we can estimate the values α and β.

Then we can address a range of questions, such as

- What is the average duration of unemployment?

- Over the long-run, what fraction of the time does a worker find herself unemployed?

- Conditional on employment, what is the probability of becoming unemployed at least once over the next 12 months?

We’ll cover some of these applications below.

33.2.2.3Example 3¶

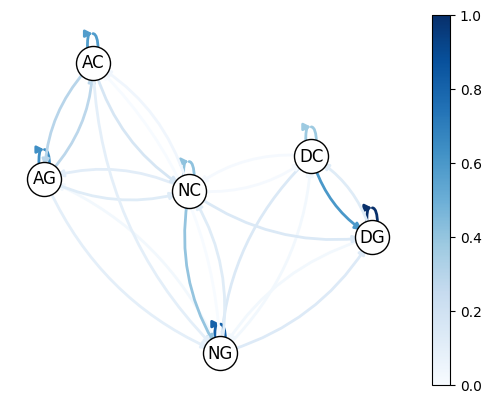

Imam and Temple Imam & Temple (2023) categorize political institutions into three types: democracy , autocracy , and an intermediate state called anocracy .

Each institution can have two potential development regimes: collapse and growth . This results in six possible states: and .

Imam and Temple Imam & Temple (2023) estimate the following transition probabilities:

nodes = ['DG', 'DC', 'NG', 'NC', 'AG', 'AC']

P = [[0.86, 0.11, 0.03, 0.00, 0.00, 0.00],

[0.52, 0.33, 0.13, 0.02, 0.00, 0.00],

[0.12, 0.03, 0.70, 0.11, 0.03, 0.01],

[0.13, 0.02, 0.35, 0.36, 0.10, 0.04],

[0.00, 0.00, 0.09, 0.11, 0.55, 0.25],

[0.00, 0.00, 0.09, 0.15, 0.26, 0.50]]Here is a visualization, with darker colors indicating higher probability.

Source

G = nx.MultiDiGraph()

for start_idx, node_start in enumerate(nodes):

for end_idx, node_end in enumerate(nodes):

value = P[start_idx][end_idx]

if value != 0:

G.add_edge(node_start,node_end, weight=value)

pos = nx.spring_layout(G, seed=10)

fig, ax = plt.subplots()

nx.draw_networkx_nodes(G, pos, node_size=600, edgecolors='black', node_color='white')

nx.draw_networkx_labels(G, pos)

arc_rad = 0.2

edges = nx.draw_networkx_edges(G, pos, ax=ax, connectionstyle=f'arc3, rad = {arc_rad}', edge_cmap=cm.Blues, width=2,

edge_color=[G[nodes[0]][nodes[1]][0]['weight'] for nodes in G.edges])

pc = mpl.collections.PatchCollection(edges, cmap=cm.Blues)

ax = plt.gca()

ax.set_axis_off()

plt.colorbar(pc, ax=ax)

plt.show()

Looking at the data, we see that democracies tend to have longer-lasting growth regimes compared to autocracies (as indicated by the lower probability of transitioning from growth to growth in autocracies).

We can also find a higher probability from collapse to growth in democratic regimes.

33.2.3Defining Markov chains¶

So far we’ve given examples of Markov chains but we haven’t defined them.

Let’s do that now.

To begin, let be a finite set with elements.

The set is called the state space and are the state values.

A distribution ψ on is a probability mass function of length , where is the amount of probability allocated to state .

A Markov chain on is a sequence of random variables taking values in that have the Markov property.

This means that, for any date and any state ,

This means that once we know the current state , adding knowledge of earlier states provides no additional information about probabilities of future states.

Thus, the dynamics of a Markov chain are fully determined by the set of conditional probabilities

By construction,

- is the probability of going from to in one unit of time (one step)

- is the conditional distribution of given

We can view as a stochastic matrix where

Going the other way, if we take a stochastic matrix , we can generate a Markov chain as follows:

- draw from a distribution on

- for each , draw from

By construction, the resulting process satisfies (8).

33.3Simulation¶

A good way to study Markov chains is to simulate them.

Let’s start by doing this ourselves and then look at libraries that can help us.

In these exercises, we’ll take the state space to be .

(We start at 0 because Python arrays are indexed from 0.)

33.3.1Writing our own simulation code¶

To simulate a Markov chain, we need

- a stochastic matrix and

- a probability mass function of length from which to draw an initial realization of .

The Markov chain is then constructed as follows:

- At time , draw a realization of from the distribution .

- At each subsequent time , draw a realization of the new state from .

(That is, draw from row of .)

To implement this simulation procedure, we need a method for generating draws from a discrete distribution.

For this task, we’ll use random.draw from QuantEcon.py.

To use random.draw, we first need to convert the probability mass function

to a cumulative distribution

ψ_0 = (0.3, 0.7) # probabilities over {0, 1}

cdf = np.cumsum(ψ_0) # convert into cumulative distribution

qe.random.draw(cdf, 5) # generate 5 independent draws from ψarray([0, 1, 1, 1, 0])We’ll write our code as a function that accepts the following three arguments

- A stochastic matrix

P. - An initial distribution

ψ_0. - A positive integer

ts_lengthrepresenting the length of the time series the function should return.

def mc_sample_path(P, ψ_0=None, ts_length=1_000):

# set up

P = np.asarray(P)

X = np.empty(ts_length, dtype=int)

# Convert each row of P into a cdf

P_dist = np.cumsum(P, axis=1) # Convert rows into cdfs

# draw initial state, defaulting to 0

if ψ_0 is not None:

X_0 = qe.random.draw(np.cumsum(ψ_0))

else:

X_0 = 0

# simulate

X[0] = X_0

for t in range(ts_length - 1):

X[t+1] = qe.random.draw(P_dist[X[t], :])

return XLet’s see how it works using the small matrix

P = [[0.4, 0.6],

[0.2, 0.8]]Here’s a short time series.

mc_sample_path(P, ψ_0=(1.0, 0.0), ts_length=10)array([0, 1, 1, 1, 0, 0, 0, 1, 1, 1])It can be shown that for a long series drawn from P, the fraction of the

sample that takes value 0 will be about 0.25.

(We will explain why later.)

Moreover, this is true regardless of the initial distribution from which is drawn.

The following code illustrates this

X = mc_sample_path(P, ψ_0=(0.1, 0.9), ts_length=1_000_000)

np.mean(X == 0)np.float64(0.250481)You can try changing the initial distribution to confirm that the output is

always close to 0.25 (for the P matrix above).

33.3.2Using QuantEcon’s routines¶

QuantEcon.py has routines for handling Markov chains, including simulation.

Here’s an illustration using the same as the preceding example

mc = qe.MarkovChain(P)

X = mc.simulate(ts_length=1_000_000)

np.mean(X == 0)np.float64(0.24936)The simulate routine is faster (because it is JIT compiled).

%time mc_sample_path(P, ts_length=1_000_000) # Our homemade code versionCPU times: user 3.03 s, sys: 12.6 ms, total: 3.04 s

Wall time: 5.76 s

array([0, 1, 1, ..., 0, 1, 1])%time mc.simulate(ts_length=1_000_000) # qe code versionCPU times: user 15 ms, sys: 5.97 ms, total: 21 ms

Wall time: 30.2 ms

array([0, 0, 1, ..., 1, 1, 1])33.3.2.1Adding state values and initial conditions¶

If we wish to, we can provide a specification of state values to MarkovChain.

These state values can be integers, floats, or even strings.

The following code illustrates

mc = qe.MarkovChain(P, state_values=('unemployed', 'employed'))

mc.simulate(ts_length=4, init='employed') # Start at employed initial statearray(['employed', 'unemployed', 'unemployed', 'employed'], dtype='<U10')mc.simulate(ts_length=4, init='unemployed') # Start at unemployed initial statearray(['unemployed', 'employed', 'unemployed', 'employed'], dtype='<U10')mc.simulate(ts_length=4) # Start at randomly chosen initial statearray(['employed', 'employed', 'employed', 'employed'], dtype='<U10')If we want to see indices rather than state values as outputs as we can use

mc.simulate_indices(ts_length=4)array([1, 1, 1, 1])33.4Distributions over time¶

We learned that

- is a Markov chain with stochastic matrix

- the distribution of is known to be

What then is the distribution of , or, more generally, of ?

To answer this, we let be the distribution of for .

Our first aim is to find given and .

To begin, pick any .

To get the probability of being at tomorrow (at ), we account for all ways this can happen and sum their probabilities.

This leads to

(We are using the law of total probability.)

Rewriting this statement in terms of marginal and conditional probabilities gives

There are such equations, one for each .

If we think of and as row vectors, these equations are summarized by the matrix expression

Thus, we postmultiply by to move a distribution forward one unit of time.

By postmultiplying times, we move a distribution forward steps into the future.

Hence, iterating on (12), the expression is also valid --- here is the -th power of .

As a special case, we see that if is the initial distribution from which is drawn, then is the distribution of .

This is very important, so let’s repeat it

The general rule is that postmultiplying a distribution by shifts it forward units of time.

Hence the following is also valid.

33.4.1Multiple step transition probabilities¶

We know that the probability of transitioning from to in one step is .

It turns out that the probability of transitioning from to in steps is , the -th element of the -th power of .

To see why, consider again (14), but now with a that puts all probability on state .

Then is a vector with 1 in position and zero elsewhere.

Inserting this into (14), we see that, conditional on , the distribution of is the -th row of .

In particular

33.4.2Example: probability of recession¶

Recall the stochastic matrix for recession and growth considered above.

Suppose that the current state is unknown --- perhaps statistics are available only at the end of the current month.

We guess that the probability that the economy is in state is at time t.

The probability of being in recession (either mild or severe) in 6 months time is given by

33.4.3Example 2: cross-sectional distributions¶

The distributions we have been studying can be viewed either

- as probabilities or

- as cross-sectional frequencies that the law of large numbers leads us to anticipate for large samples.

To illustrate, recall our model of employment/unemployment dynamics for a given worker discussed above.

Consider a large population of workers, each of whose lifetime experience is described by the specified dynamics, with each worker’s outcomes being realizations of processes that are statistically independent of all other workers’ processes.

Let be the current cross-sectional distribution over .

The cross-sectional distribution records fractions of workers employed and unemployed at a given moment .

- For example, is the unemployment rate at time .

What will the cross-sectional distribution be in 10 periods hence?

The answer is , where is the stochastic matrix in (4).

This is because each worker’s state evolves according to , so is a marginal distribution for a single randomly selected worker.

But when the sample is large, outcomes and probabilities are roughly equal (by an application of the law of large numbers).

So for a very large (tending to infinite) population, also represents fractions of workers in each state.

This is exactly the cross-sectional distribution.

33.5Stationary distributions¶

As seen in (12), we can shift a distribution forward one unit of time via postmultiplication by .

Some distributions are invariant under this updating process --- for example,

P = np.array([[0.4, 0.6],

[0.2, 0.8]])

ψ = (0.25, 0.75)

ψ @ Parray([0.25, 0.75])Notice that ψ @ P is the same as ψ.

Such distributions are called stationary or invariant.

Formally, a distribution on is called stationary for if .

Notice that, postmultiplying by , we have .

Continuing in the same way leads to for all .

This tells us an important fact: If the distribution of is a stationary distribution, then will have this same distribution for all .

The following theorem is proved in Chapter 4 of Sargent & Stachurski (2023) and numerous other sources.

Note that there can be many stationary distributions corresponding to a given stochastic matrix .

- For example, if is the identity matrix, then all distributions on are stationary.

To get uniqueness, we need the Markov chain to “mix around,” so that the state doesn’t get stuck in some part of the state space.

This gives some intuition for the following theorem.

We will come back to this when we introduce irreducibility in the next lecture on Markov chains.

33.5.1Example¶

Recall our model of the employment/unemployment dynamics of a particular worker discussed above.

If and , then the transition matrix is everywhere positive.

Let be the stationary distribution, so that corresponds to unemployment (state 0).

Using and a bit of algebra yields

This is, in some sense, a steady state probability of unemployment.

Not surprisingly it tends to zero as , and to one as .

33.5.2Calculating stationary distributions¶

A stable algorithm for computing stationary distributions is implemented in QuantEcon.py.

Here’s an example

P = [[0.4, 0.6],

[0.2, 0.8]]

mc = qe.MarkovChain(P)

mc.stationary_distributions # Show all stationary distributionsarray([[0.25, 0.75]])33.5.3Asymptotic stationarity¶

Consider an everywhere positive stochastic matrix with unique stationary distribution .

Sometimes the distribution of converges to regardless of .

For example, we have the following result

This situation is often referred to as asymptotic stationarity or global stability.

A proof of the theorem can be found in Chapter 4 of Sargent & Stachurski (2023), as well as many other sources.

33.5.3.1Example: Hamilton’s chain¶

Hamilton’s chain satisfies the conditions of the theorem because is everywhere positive:

P = np.array([[0.971, 0.029, 0.000],

[0.145, 0.778, 0.077],

[0.000, 0.508, 0.492]])

P @ Parray([[0.947046, 0.050721, 0.002233],

[0.253605, 0.648605, 0.09779 ],

[0.07366 , 0.64516 , 0.28118 ]])Let’s pick an initial distribution and trace out the sequence of distributions for , for .

First, we write a function to iterate the sequence of distributions for ts_length period

def iterate_ψ(ψ_0, P, ts_length):

n = len(P)

ψ_t = np.empty((ts_length, n))

ψ_t[0 ]= ψ_0

for t in range(1, ts_length):

ψ_t[t] = ψ_t[t-1] @ P

return ψ_tNow we plot the sequence

Source

ψ_1 = (0.0, 0.0, 1.0)

ψ_2 = (1.0, 0.0, 0.0)

ψ_3 = (0.0, 1.0, 0.0) # Three initial conditions

colors = ['blue','red', 'green'] # Different colors for each initial point

# Define the vertices of the unit simplex

v = np.array([[1, 0, 0], [0, 1, 0], [0, 0, 1], [0, 0, 0]])

# Define the faces of the unit simplex

faces = [

[v[0], v[1], v[2]],

[v[0], v[1], v[3]],

[v[0], v[2], v[3]],

[v[1], v[2], v[3]]

]

fig = plt.figure()

ax = fig.add_subplot(projection='3d')

def update(n):

ax.clear()

ax.set_xlim([0, 1])

ax.set_ylim([0, 1])

ax.set_zlim([0, 1])

ax.view_init(45, 45)

simplex = Poly3DCollection(faces, alpha=0.03)

ax.add_collection3d(simplex)

for idx, ψ_0 in enumerate([ψ_1, ψ_2, ψ_3]):

ψ_t = iterate_ψ(ψ_0, P, n+1)

for i, point in enumerate(ψ_t):

ax.scatter(point[0], point[1], point[2], color=colors[idx], s=60, alpha=(i+1)/len(ψ_t))

mc = qe.MarkovChain(P)

ψ_star = mc.stationary_distributions[0]

ax.scatter(ψ_star[0], ψ_star[1], ψ_star[2], c='yellow', s=60)

return fig,

anim = FuncAnimation(fig, update, frames=range(20), blit=False, repeat=False)

plt.close()

HTML(anim.to_jshtml())Here

- is the stochastic matrix for recession and growth considered above.

- The red, blue and green dots are initial marginal probability distributions , each of which is represented as a vector in .

- The transparent dots are the marginal distributions for , for .

- The yellow dot is .

You might like to try experimenting with different initial conditions.

33.5.3.2Example: failure of convergence¶

Consider the periodic chain with stochastic matrix

This matrix does not satisfy the conditions of Theorem 3 because, as you can readily check,

- when is odd and

- , the identity matrix, when is even.

Hence there is no such that all elements of are strictly positive.

Moreover, we can see that global stability does not hold.

For instance, if we start at , then is when is even and when is odd.

We can see similar phenomena in higher dimensions.

The next figure illustrates this for a periodic Markov chain with three states.

Source

ψ_1 = (0.0, 0.0, 1.0)

ψ_2 = (0.5, 0.5, 0.0)

ψ_3 = (0.25, 0.25, 0.5)

ψ_4 = (1/3, 1/3, 1/3)

P = np.array([[0.0, 1.0, 0.0],

[0.0, 0.0, 1.0],

[1.0, 0.0, 0.0]])

fig = plt.figure()

ax = fig.add_subplot(projection='3d')

colors = ['red','yellow', 'green', 'blue'] # Different colors for each initial point

# Define the vertices of the unit simplex

v = np.array([[1, 0, 0], [0, 1, 0], [0, 0, 1], [0, 0, 0]])

# Define the faces of the unit simplex

faces = [

[v[0], v[1], v[2]],

[v[0], v[1], v[3]],

[v[0], v[2], v[3]],

[v[1], v[2], v[3]]

]

def update(n):

ax.clear()

ax.set_xlim([0, 1])

ax.set_ylim([0, 1])

ax.set_zlim([0, 1])

ax.view_init(45, 45)

# Plot the 3D unit simplex as planes

simplex = Poly3DCollection(faces,alpha=0.05)

ax.add_collection3d(simplex)

for idx, ψ_0 in enumerate([ψ_1, ψ_2, ψ_3, ψ_4]):

ψ_t = iterate_ψ(ψ_0, P, n+1)

point = ψ_t[-1]

ax.scatter(point[0], point[1], point[2], color=colors[idx], s=60)

points = np.array(ψ_t)

ax.plot(points[:, 0], points[:, 1], points[:, 2], color=colors[idx],linewidth=0.75)

return fig,

anim = FuncAnimation(fig, update, frames=range(20), blit=False, repeat=False)

plt.close()

HTML(anim.to_jshtml())This animation demonstrates the behavior of an irreducible and periodic stochastic matrix.

The red, yellow, and green dots represent different initial probability distributions.

The blue dot represents the unique stationary distribution.

Unlike Hamilton’s Markov chain, these initial distributions do not converge to the unique stationary distribution.

Instead, they cycle periodically around the probability simplex, illustrating that asymptotic stability fails.

33.6Computing expectations¶

We sometimes want to compute mathematical expectations of functions of of the form

and conditional expectations such as

where

- is a Markov chain generated by stochastic matrix .

- is a given function, which, in terms of matrix algebra, we’ll think of as the column vector

Computing the unconditional expectation (20) is easy.

We just sum over the marginal distribution of to get

Here ψ is the distribution of .

Since ψ and hence are row vectors, we can also write this as

For the conditional expectation (21), we need to sum over the conditional distribution of given .

We already know that this is , so

33.6.1Expectations of geometric sums¶

Sometimes we want to compute the mathematical expectation of a geometric sum, such as .

In view of the preceding discussion, this is

By the Neumann series lemma, this sum can be calculated using

The vector stores the conditional expectation over all .

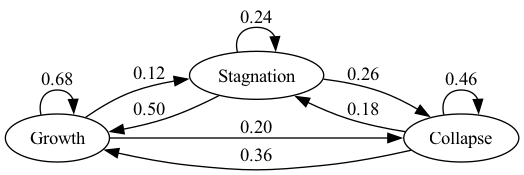

Solution to Exercise 1

Solution 1:

Since the matrix is everywhere positive, there is a unique stationary distribution such that as .

Solution 2:

One simple way to calculate the stationary distribution is to take the power of the transition matrix as we have shown before

P = np.array([[0.68, 0.12, 0.20],

[0.50, 0.24, 0.26],

[0.36, 0.18, 0.46]])

P_power = np.linalg.matrix_power(P, 20)

P_powerarray([[0.56145769, 0.15565164, 0.28289067],

[0.56145769, 0.15565164, 0.28289067],

[0.56145769, 0.15565164, 0.28289067]])Note that rows of the transition matrix converge to the stationary distribution.

ψ_star_p = P_power[0]

ψ_star_parray([0.56145769, 0.15565164, 0.28289067])mc = qe.MarkovChain(P)

ψ_star = mc.stationary_distributions[0]

ψ_stararray([0.56145769, 0.15565164, 0.28289067])Solution to Exercise 2

Solution 1:

Although is not every positive, when is everywhere positive.

P = np.array([[0.86, 0.11, 0.03, 0.00, 0.00, 0.00],

[0.52, 0.33, 0.13, 0.02, 0.00, 0.00],

[0.12, 0.03, 0.70, 0.11, 0.03, 0.01],

[0.13, 0.02, 0.35, 0.36, 0.10, 0.04],

[0.00, 0.00, 0.09, 0.11, 0.55, 0.25],

[0.00, 0.00, 0.09, 0.15, 0.26, 0.50]])

np.linalg.matrix_power(P,3)array([[0.764927, 0.133481, 0.085949, 0.011481, 0.002956, 0.001206],

[0.658861, 0.131559, 0.161367, 0.031703, 0.011296, 0.005214],

[0.291394, 0.057788, 0.439702, 0.113408, 0.062707, 0.035001],

[0.272459, 0.051361, 0.365075, 0.132207, 0.108152, 0.070746],

[0.064129, 0.012533, 0.232875, 0.154385, 0.299243, 0.236835],

[0.072865, 0.014081, 0.244139, 0.160905, 0.265846, 0.242164]])So it satisfies the requirement.

Solution 2:

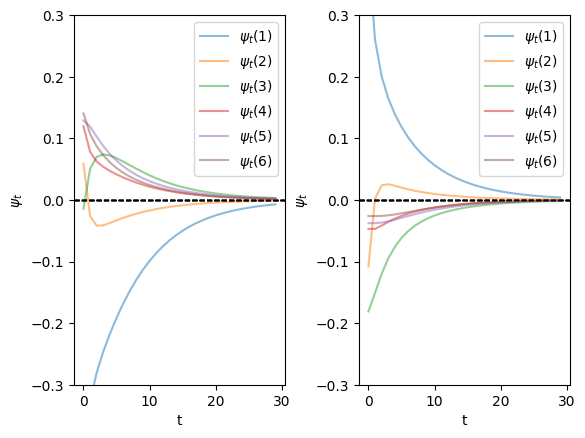

We find the distribution ψ converges to the stationary distribution quickly regardless of the initial distributions

ts_length = 30

num_distributions = 20

nodes = ['DG', 'DC', 'NG', 'NC', 'AG', 'AC']

# Get parameters of transition matrix

n = len(P)

mc = qe.MarkovChain(P)

ψ_star = mc.stationary_distributions[0]

ψ_0 = np.array([[1/6 for i in range(6)],

[0 if i != 0 else 1 for i in range(6)]])

## Draw the plot

fig, axes = plt.subplots(ncols=2)

plt.subplots_adjust(wspace=0.35)

for idx in range(2):

ψ_t = iterate_ψ(ψ_0[idx], P, ts_length)

for i in range(n):

axes[idx].plot(ψ_t[:, i] - ψ_star[i], alpha=0.5, label=fr'$\psi_t({i+1})$')

axes[idx].set_ylim([-0.3, 0.3])

axes[idx].set_xlabel('t')

axes[idx].set_ylabel(fr'$\psi_t$')

axes[idx].legend()

axes[idx].axhline(0, linestyle='dashed', lw=1, color = 'black')

plt.show()

Solution to Exercise 3

Suppose that is stochastic and, moreover, that is stochastic for some integer .

We will prove that is also stochastic.

(We are doing proof by induction --- we assume the claim is true at and now prove it is true at .)

To see this, observe that, since is stochastic and the product of nonnegative matrices is nonnegative, is nonnegative.

Also, if is a column vector of ones, then, since is stochastic we have (rows sum to one).

Therefore

The proof is done.

- Hamilton, J. D. (2005). What’s real about the business cycle? Federal Reserve Bank of St. Louis Review, July-August, 435–452.

- Imam, P., & Temple, J. R. (2023). Political Institutions and Output Collapses. IMF Working Paper.

- Sargent, T. J., & Stachurski, J. (2023). Economic Networks: Theory and Computation. arXiv Preprint arXiv:2203.11972.

Creative Commons License – This work is licensed under a Creative Commons Attribution-ShareAlike 4.0 International.