18. Distributions and Probabilities

18.1Outline¶

In this lecture we give a quick introduction to data and probability distributions using Python.

!pip install --upgrade yfinanceOutput

Requirement already satisfied: yfinance in /opt/hostedtoolcache/Python/3.12.9/x64/lib/python3.12/site-packages (0.2.53)

Requirement already satisfied: pandas>=1.3.0 in /opt/hostedtoolcache/Python/3.12.9/x64/lib/python3.12/site-packages (from yfinance) (2.2.3)

Requirement already satisfied: numpy>=1.16.5 in /opt/hostedtoolcache/Python/3.12.9/x64/lib/python3.12/site-packages (from yfinance) (2.1.3)

Requirement already satisfied: requests>=2.31 in /opt/hostedtoolcache/Python/3.12.9/x64/lib/python3.12/site-packages (from yfinance) (2.32.3)

Requirement already satisfied: multitasking>=0.0.7 in /opt/hostedtoolcache/Python/3.12.9/x64/lib/python3.12/site-packages (from yfinance) (0.0.11)

Requirement already satisfied: platformdirs>=2.0.0 in /opt/hostedtoolcache/Python/3.12.9/x64/lib/python3.12/site-packages (from yfinance) (4.3.6)

Requirement already satisfied: pytz>=2022.5 in /opt/hostedtoolcache/Python/3.12.9/x64/lib/python3.12/site-packages (from yfinance) (2025.1)

Requirement already satisfied: frozendict>=2.3.4 in /opt/hostedtoolcache/Python/3.12.9/x64/lib/python3.12/site-packages (from yfinance) (2.4.6)

Requirement already satisfied: peewee>=3.16.2 in /opt/hostedtoolcache/Python/3.12.9/x64/lib/python3.12/site-packages (from yfinance) (3.17.9)

Requirement already satisfied: beautifulsoup4>=4.11.1 in /opt/hostedtoolcache/Python/3.12.9/x64/lib/python3.12/site-packages (from yfinance) (4.13.3)

Requirement already satisfied: soupsieve>1.2 in /opt/hostedtoolcache/Python/3.12.9/x64/lib/python3.12/site-packages (from beautifulsoup4>=4.11.1->yfinance) (2.6)

Requirement already satisfied: typing-extensions>=4.0.0 in /opt/hostedtoolcache/Python/3.12.9/x64/lib/python3.12/site-packages (from beautifulsoup4>=4.11.1->yfinance) (4.12.2)

Requirement already satisfied: python-dateutil>=2.8.2 in /opt/hostedtoolcache/Python/3.12.9/x64/lib/python3.12/site-packages (from pandas>=1.3.0->yfinance) (2.9.0.post0)

Requirement already satisfied: tzdata>=2022.7 in /opt/hostedtoolcache/Python/3.12.9/x64/lib/python3.12/site-packages (from pandas>=1.3.0->yfinance) (2025.1)

Requirement already satisfied: charset-normalizer<4,>=2 in /opt/hostedtoolcache/Python/3.12.9/x64/lib/python3.12/site-packages (from requests>=2.31->yfinance) (3.4.1)

Requirement already satisfied: idna<4,>=2.5 in /opt/hostedtoolcache/Python/3.12.9/x64/lib/python3.12/site-packages (from requests>=2.31->yfinance) (3.10)

Requirement already satisfied: urllib3<3,>=1.21.1 in /opt/hostedtoolcache/Python/3.12.9/x64/lib/python3.12/site-packages (from requests>=2.31->yfinance) (2.3.0)

Requirement already satisfied: certifi>=2017.4.17 in /opt/hostedtoolcache/Python/3.12.9/x64/lib/python3.12/site-packages (from requests>=2.31->yfinance) (2025.1.31)

Requirement already satisfied: six>=1.5 in /opt/hostedtoolcache/Python/3.12.9/x64/lib/python3.12/site-packages (from python-dateutil>=2.8.2->pandas>=1.3.0->yfinance) (1.17.0)

import matplotlib.pyplot as plt

import pandas as pd

import numpy as np

import yfinance as yf

import scipy.stats

import seaborn as sns18.2Common distributions¶

In this section we recall the definitions of some well-known distributions and explore how to manipulate them with SciPy.

18.2.1Discrete distributions¶

Let’s start with discrete distributions.

A discrete distribution is defined by a set of numbers and a probability mass function (PMF) on , which is a function from to with the property

We say that a random variable has distribution if takes value with probability .

That is,

The mean or expected value of a random variable with distribution is

Expectation is also called the first moment of the distribution.

We also refer to this number as the mean of the distribution (represented by) .

The variance of is defined as

Variance is also called the second central moment of the distribution.

The cumulative distribution function (CDF) of is defined by

Here if “statement” is true and zero otherwise.

Hence the second term takes all and sums their probabilities.

18.2.1.1Uniform distribution¶

One simple example is the uniform distribution, where for all .

We can import the uniform distribution on from SciPy like so:

n = 10

u = scipy.stats.randint(1, n+1)Here’s the mean and variance:

u.mean(), u.var()(np.float64(5.5), np.float64(8.25))The formula for the mean is , and the formula for the variance is .

Now let’s evaluate the PMF:

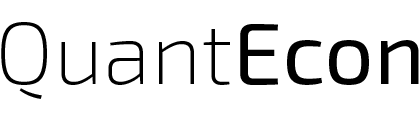

u.pmf(1)np.float64(0.1)u.pmf(2)np.float64(0.1)Here’s a plot of the probability mass function:

fig, ax = plt.subplots()

S = np.arange(1, n+1)

ax.plot(S, u.pmf(S), linestyle='', marker='o', alpha=0.8, ms=4)

ax.vlines(S, 0, u.pmf(S), lw=0.2)

ax.set_xticks(S)

ax.set_xlabel('S')

ax.set_ylabel('PMF')

plt.show()

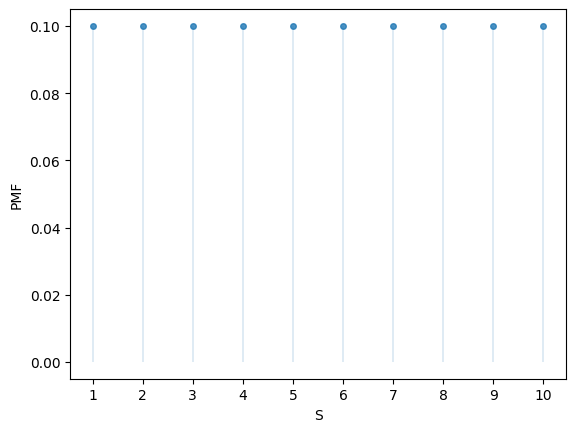

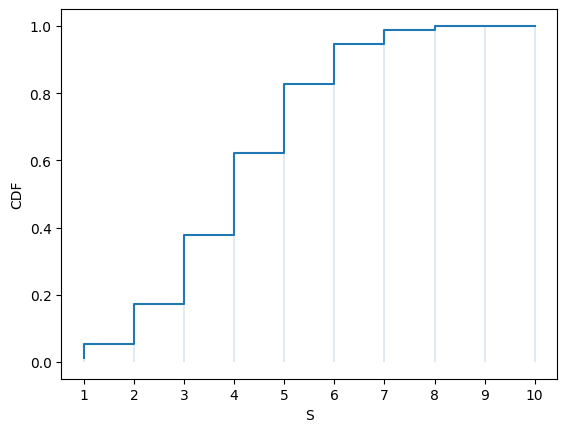

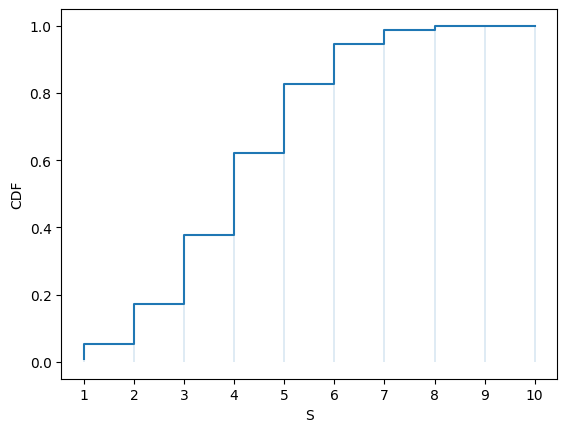

Here’s a plot of the CDF:

fig, ax = plt.subplots()

S = np.arange(1, n+1)

ax.step(S, u.cdf(S))

ax.vlines(S, 0, u.cdf(S), lw=0.2)

ax.set_xticks(S)

ax.set_xlabel('S')

ax.set_ylabel('CDF')

plt.show()

The CDF jumps up by at .

18.2.1.2Bernoulli distribution¶

Another useful distribution is the Bernoulli distribution on , which has PMF:

Here is a parameter.

We can think of this distribution as modeling probabilities for a random trial with success probability θ.

- means that the trial succeeds (takes value 1) with probability θ

- means that the trial fails (takes value 0) with probability

The formula for the mean is θ, and the formula for the variance is .

We can import the Bernoulli distribution on from SciPy like so:

θ = 0.4

u = scipy.stats.bernoulli(θ)Here’s the mean and variance at

u.mean(), u.var()(np.float64(0.4), np.float64(0.24))We can evaluate the PMF as follows

u.pmf(0), u.pmf(1)(np.float64(0.6), np.float64(0.4))18.2.1.3Binomial distribution¶

Another useful (and more interesting) distribution is the binomial distribution on , which has PMF:

Again, is a parameter.

The interpretation of is: the probability of successes in independent trials with success probability θ.

For example, if , then is the probability of heads in flips of a fair coin.

The formula for the mean is and the formula for the variance is .

Let’s investigate an example

n = 10

θ = 0.5

u = scipy.stats.binom(n, θ)According to our formulas, the mean and variance are

n * θ, n * θ * (1 - θ)(5.0, 2.5)Let’s see if SciPy gives us the same results:

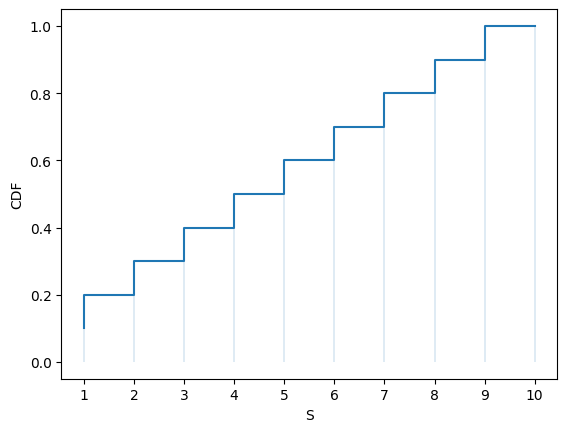

u.mean(), u.var()(np.float64(5.0), np.float64(2.5))Here’s the PMF:

u.pmf(1)np.float64(0.009765625000000002)fig, ax = plt.subplots()

S = np.arange(1, n+1)

ax.plot(S, u.pmf(S), linestyle='', marker='o', alpha=0.8, ms=4)

ax.vlines(S, 0, u.pmf(S), lw=0.2)

ax.set_xticks(S)

ax.set_xlabel('S')

ax.set_ylabel('PMF')

plt.show()

Here’s the CDF:

fig, ax = plt.subplots()

S = np.arange(1, n+1)

ax.step(S, u.cdf(S))

ax.vlines(S, 0, u.cdf(S), lw=0.2)

ax.set_xticks(S)

ax.set_xlabel('S')

ax.set_ylabel('CDF')

plt.show()

Solution to Exercise 2

Here is one solution:

fig, ax = plt.subplots()

S = np.arange(1, n+1)

u_sum = np.cumsum(u.pmf(S))

ax.step(S, u_sum)

ax.vlines(S, 0, u_sum, lw=0.2)

ax.set_xticks(S)

ax.set_xlabel('S')

ax.set_ylabel('CDF')

plt.show()

We can see that the output graph is the same as the one above.

18.2.1.4Geometric distribution¶

The geometric distribution has infinite support and its PMF is given by

where is a parameter

(A discrete distribution has infinite support if the set of points to which it assigns positive probability is infinite.)

To understand the distribution, think of repeated independent random trials, each with success probability θ.

The interpretation of is: the probability there are failures before the first success occurs.

It can be shown that the mean of the distribution is and the variance is .

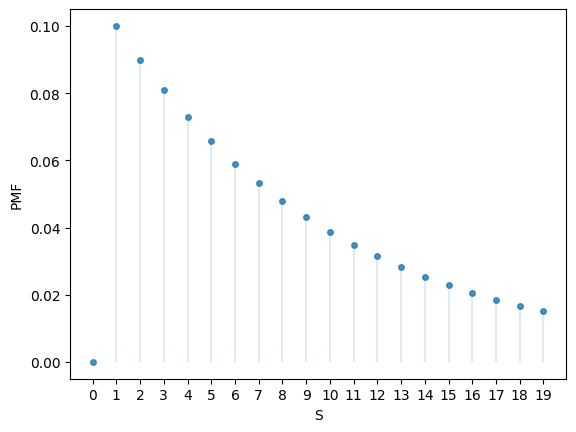

Here’s an example.

θ = 0.1

u = scipy.stats.geom(θ)

u.mean(), u.var()(np.float64(10.0), np.float64(90.0))Here’s part of the PMF:

fig, ax = plt.subplots()

n = 20

S = np.arange(n)

ax.plot(S, u.pmf(S), linestyle='', marker='o', alpha=0.8, ms=4)

ax.vlines(S, 0, u.pmf(S), lw=0.2)

ax.set_xticks(S)

ax.set_xlabel('S')

ax.set_ylabel('PMF')

plt.show()

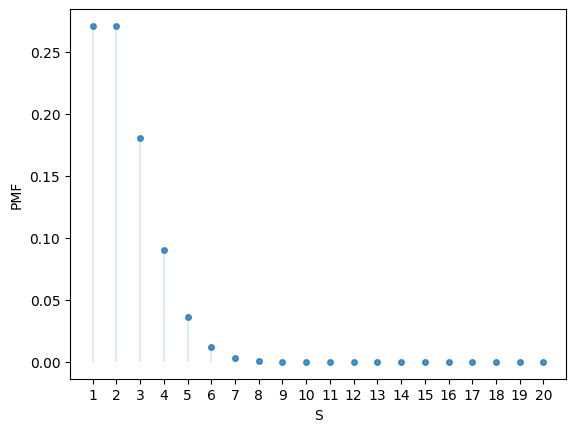

18.2.1.5Poisson distribution¶

The Poisson distribution on with parameter has PMF

The interpretation of is: the probability of events in a fixed time interval, where the events occur independently at a constant rate λ.

It can be shown that the mean is λ and the variance is also λ.

Here’s an example.

λ = 2

u = scipy.stats.poisson(λ)

u.mean(), u.var()(np.float64(2.0), np.float64(2.0))Here’s the PMF:

u.pmf(1)np.float64(0.2706705664732254)fig, ax = plt.subplots()

S = np.arange(1, n+1)

ax.plot(S, u.pmf(S), linestyle='', marker='o', alpha=0.8, ms=4)

ax.vlines(S, 0, u.pmf(S), lw=0.2)

ax.set_xticks(S)

ax.set_xlabel('S')

ax.set_ylabel('PMF')

plt.show()

18.2.2Continuous distributions¶

A continuous distribution is represented by a probability density function, which is a function over (the set of all real numbers) such that for all and

We say that random variable has distribution if

for all .

The definition of the mean and variance of a random variable with distribution are the same as the discrete case, after replacing the sum with an integral.

For example, the mean of is

The cumulative distribution function (CDF) of is defined by

18.2.2.1Normal distribution¶

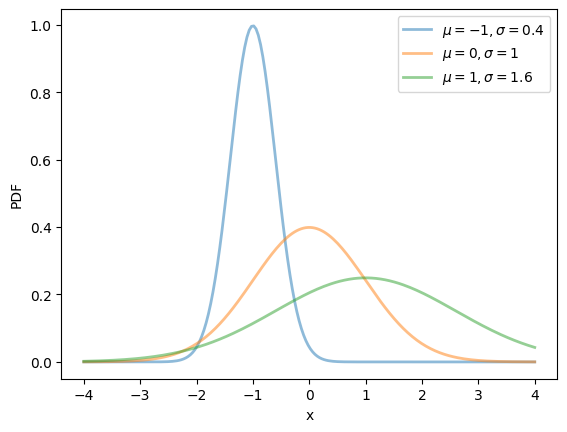

Perhaps the most famous distribution is the normal distribution, which has density

This distribution has two parameters, and .

Using calculus, it can be shown that, for this distribution, the mean is μ and the variance is .

We can obtain the moments, PDF and CDF of the normal density via SciPy as follows:

μ, σ = 0.0, 1.0

u = scipy.stats.norm(μ, σ)u.mean(), u.var()(np.float64(0.0), np.float64(1.0))Here’s a plot of the density --- the famous “bell-shaped curve”:

μ_vals = [-1, 0, 1]

σ_vals = [0.4, 1, 1.6]

fig, ax = plt.subplots()

x_grid = np.linspace(-4, 4, 200)

for μ, σ in zip(μ_vals, σ_vals):

u = scipy.stats.norm(μ, σ)

ax.plot(x_grid, u.pdf(x_grid),

alpha=0.5, lw=2,

label=rf'$\mu={μ}, \sigma={σ}$')

ax.set_xlabel('x')

ax.set_ylabel('PDF')

plt.legend()

plt.show()

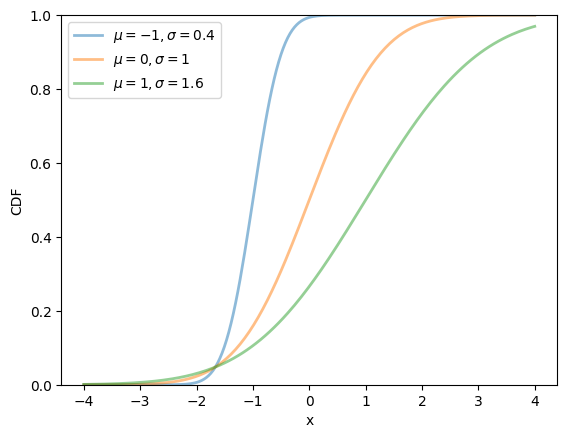

Here’s a plot of the CDF:

fig, ax = plt.subplots()

for μ, σ in zip(μ_vals, σ_vals):

u = scipy.stats.norm(μ, σ)

ax.plot(x_grid, u.cdf(x_grid),

alpha=0.5, lw=2,

label=rf'$\mu={μ}, \sigma={σ}$')

ax.set_ylim(0, 1)

ax.set_xlabel('x')

ax.set_ylabel('CDF')

plt.legend()

plt.show()

18.2.2.2Lognormal distribution¶

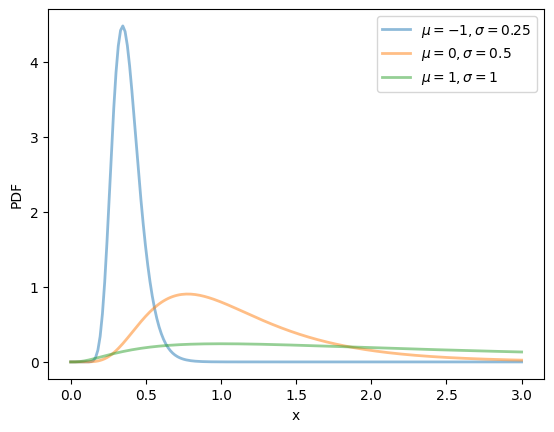

The lognormal distribution is a distribution on with density

This distribution has two parameters, μ and σ.

It can be shown that, for this distribution, the mean is and the variance is .

It can be proved that

- if is lognormally distributed, then is normally distributed, and

- if is normally distributed, then is lognormally distributed.

We can obtain the moments, PDF, and CDF of the lognormal density as follows:

μ, σ = 0.0, 1.0

u = scipy.stats.lognorm(s=σ, scale=np.exp(μ))u.mean(), u.var()(np.float64(1.6487212707001282), np.float64(4.670774270471604))μ_vals = [-1, 0, 1]

σ_vals = [0.25, 0.5, 1]

x_grid = np.linspace(0, 3, 200)

fig, ax = plt.subplots()

for μ, σ in zip(μ_vals, σ_vals):

u = scipy.stats.lognorm(σ, scale=np.exp(μ))

ax.plot(x_grid, u.pdf(x_grid),

alpha=0.5, lw=2,

label=fr'$\mu={μ}, \sigma={σ}$')

ax.set_xlabel('x')

ax.set_ylabel('PDF')

plt.legend()

plt.show()

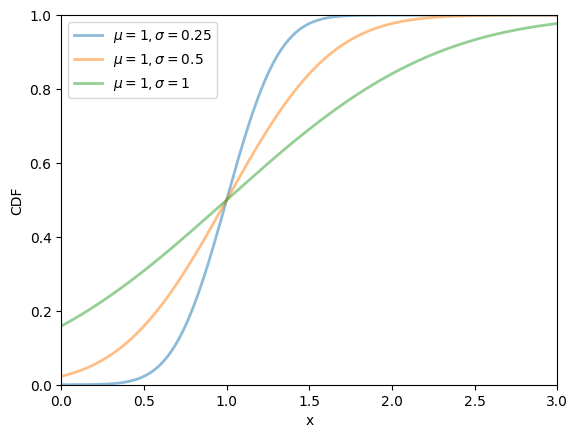

fig, ax = plt.subplots()

μ = 1

for σ in σ_vals:

u = scipy.stats.norm(μ, σ)

ax.plot(x_grid, u.cdf(x_grid),

alpha=0.5, lw=2,

label=rf'$\mu={μ}, \sigma={σ}$')

ax.set_ylim(0, 1)

ax.set_xlim(0, 3)

ax.set_xlabel('x')

ax.set_ylabel('CDF')

plt.legend()

plt.show()

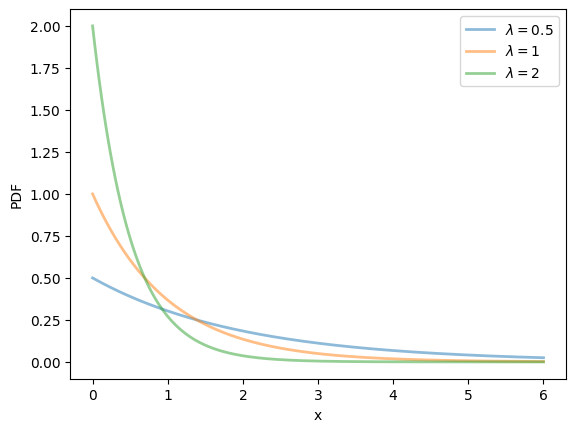

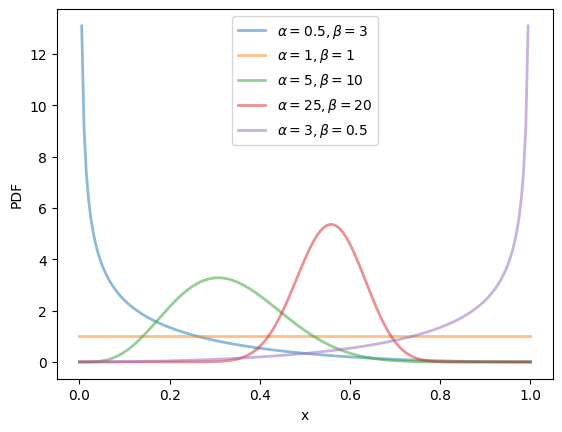

18.2.2.3Exponential distribution¶

The exponential distribution is a distribution supported on with density

This distribution has one parameter λ.

The exponential distribution can be thought of as the continuous analog of the geometric distribution.

It can be shown that, for this distribution, the mean is and the variance is .

We can obtain the moments, PDF, and CDF of the exponential density as follows:

λ = 1.0

u = scipy.stats.expon(scale=1/λ)u.mean(), u.var()(np.float64(1.0), np.float64(1.0))fig, ax = plt.subplots()

λ_vals = [0.5, 1, 2]

x_grid = np.linspace(0, 6, 200)

for λ in λ_vals:

u = scipy.stats.expon(scale=1/λ)

ax.plot(x_grid, u.pdf(x_grid),

alpha=0.5, lw=2,

label=rf'$\lambda={λ}$')

ax.set_xlabel('x')

ax.set_ylabel('PDF')

plt.legend()

plt.show()

fig, ax = plt.subplots()

for λ in λ_vals:

u = scipy.stats.expon(scale=1/λ)

ax.plot(x_grid, u.cdf(x_grid),

alpha=0.5, lw=2,

label=rf'$\lambda={λ}$')

ax.set_ylim(0, 1)

ax.set_xlabel('x')

ax.set_ylabel('CDF')

plt.legend()

plt.show()

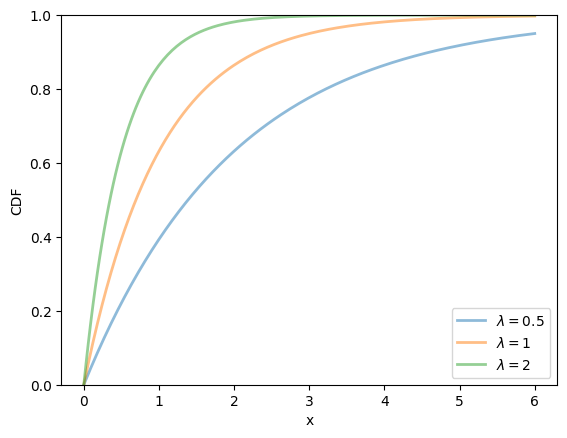

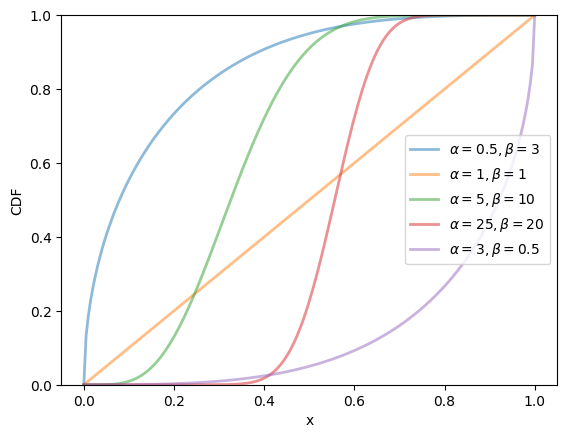

18.2.2.4Beta distribution¶

The beta distribution is a distribution on with density

where Γ is the gamma function.

(The role of the gamma function is just to normalize the density, so that it integrates to one.)

This distribution has two parameters, and .

It can be shown that, for this distribution, the mean is and the variance is .

We can obtain the moments, PDF, and CDF of the Beta density as follows:

α, β = 3.0, 1.0

u = scipy.stats.beta(α, β)u.mean(), u.var()(np.float64(0.75), np.float64(0.0375))α_vals = [0.5, 1, 5, 25, 3]

β_vals = [3, 1, 10, 20, 0.5]

x_grid = np.linspace(0, 1, 200)

fig, ax = plt.subplots()

for α, β in zip(α_vals, β_vals):

u = scipy.stats.beta(α, β)

ax.plot(x_grid, u.pdf(x_grid),

alpha=0.5, lw=2,

label=rf'$\alpha={α}, \beta={β}$')

ax.set_xlabel('x')

ax.set_ylabel('PDF')

plt.legend()

plt.show()

fig, ax = plt.subplots()

for α, β in zip(α_vals, β_vals):

u = scipy.stats.beta(α, β)

ax.plot(x_grid, u.cdf(x_grid),

alpha=0.5, lw=2,

label=rf'$\alpha={α}, \beta={β}$')

ax.set_ylim(0, 1)

ax.set_xlabel('x')

ax.set_ylabel('CDF')

plt.legend()

plt.show()

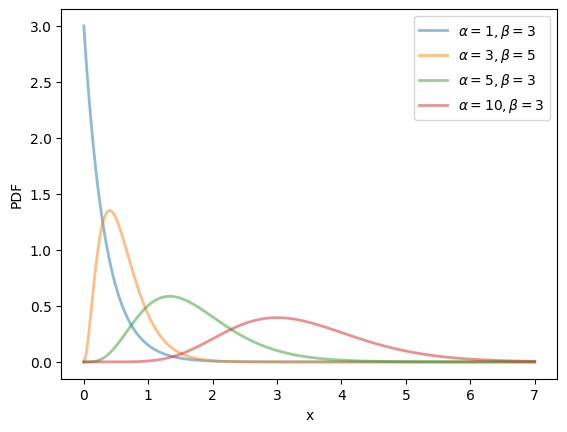

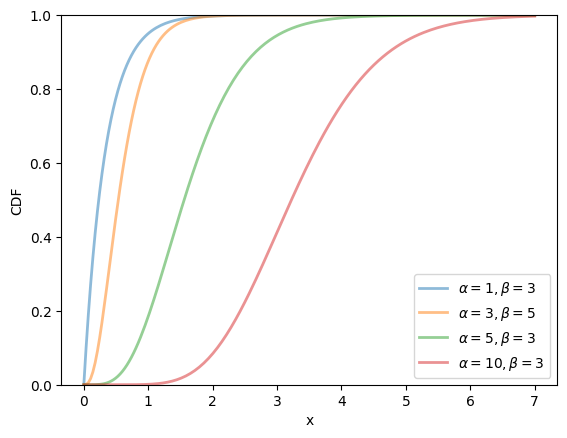

18.2.2.5Gamma distribution¶

The gamma distribution is a distribution on with density

This distribution has two parameters, and .

It can be shown that, for this distribution, the mean is and the variance is .

One interpretation is that if is gamma distributed and α is an integer, then is the sum of α independent exponentially distributed random variables with mean .

We can obtain the moments, PDF, and CDF of the Gamma density as follows:

α, β = 3.0, 2.0

u = scipy.stats.gamma(α, scale=1/β)u.mean(), u.var()(np.float64(1.5), np.float64(0.75))α_vals = [1, 3, 5, 10]

β_vals = [3, 5, 3, 3]

x_grid = np.linspace(0, 7, 200)

fig, ax = plt.subplots()

for α, β in zip(α_vals, β_vals):

u = scipy.stats.gamma(α, scale=1/β)

ax.plot(x_grid, u.pdf(x_grid),

alpha=0.5, lw=2,

label=rf'$\alpha={α}, \beta={β}$')

ax.set_xlabel('x')

ax.set_ylabel('PDF')

plt.legend()

plt.show()

fig, ax = plt.subplots()

for α, β in zip(α_vals, β_vals):

u = scipy.stats.gamma(α, scale=1/β)

ax.plot(x_grid, u.cdf(x_grid),

alpha=0.5, lw=2,

label=rf'$\alpha={α}, \beta={β}$')

ax.set_ylim(0, 1)

ax.set_xlabel('x')

ax.set_ylabel('CDF')

plt.legend()

plt.show()

18.3Observed distributions¶

Sometimes we refer to observed data or measurements as “distributions”.

For example, let’s say we observe the income of 10 people over a year:

data = [['Hiroshi', 1200],

['Ako', 1210],

['Emi', 1400],

['Daiki', 990],

['Chiyo', 1530],

['Taka', 1210],

['Katsuhiko', 1240],

['Daisuke', 1124],

['Yoshi', 1330],

['Rie', 1340]]

df = pd.DataFrame(data, columns=['name', 'income'])

dfIn this situation, we might refer to the set of their incomes as the “income distribution.”

The terminology is confusing because this set is not a probability distribution --- it’s just a collection of numbers.

However, as we will see, there are connections between observed distributions (i.e., sets of numbers like the income distribution above) and probability distributions.

Below we explore some observed distributions.

18.3.1Summary statistics¶

Suppose we have an observed distribution with values

The sample mean of this distribution is defined as

The sample variance is defined as

For the income distribution given above, we can calculate these numbers via

x = df['income']

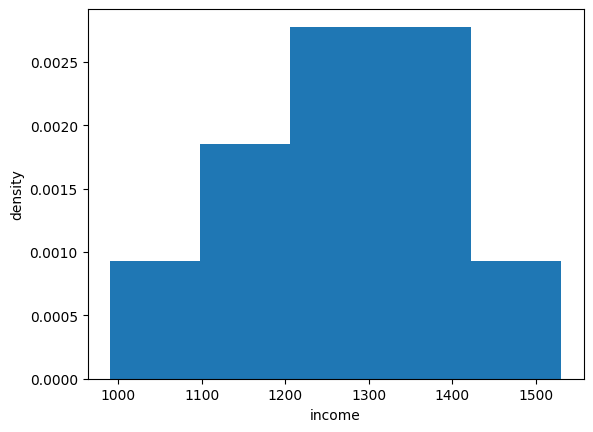

x.mean(), x.var()(np.float64(1257.4), np.float64(22680.93333333333))18.3.2Visualization¶

Let’s look at different ways that we can visualize one or more observed distributions.

We will cover

- histograms

- kernel density estimates and

- violin plots

18.3.2.1Histograms¶

We can histogram the income distribution we just constructed as follows

fig, ax = plt.subplots()

ax.hist(x, bins=5, density=True, histtype='bar')

ax.set_xlabel('income')

ax.set_ylabel('density')

plt.show()

Let’s look at a distribution from real data.

In particular, we will look at the monthly return on Amazon shares between 2000/1/1 and 2024/1/1.

The monthly return is calculated as the percent change in the share price over each month.

So we will have one observation for each month.

df = yf.download('AMZN', '2000-1-1', '2024-1-1', interval='1mo')

prices = df['Close']

x_amazon = prices.pct_change()[1:] * 100

x_amazon.head()Output

The first observation is the monthly return (percent change) over January 2000, which was

x_amazon.iloc[0]Ticker

AMZN 6.679568

Name: 2000-02-01 00:00:00, dtype: float64Let’s turn the return observations into an array and histogram it.

fig, ax = plt.subplots()

ax.hist(x_amazon, bins=20)

ax.set_xlabel('monthly return (percent change)')

ax.set_ylabel('density')

plt.show()

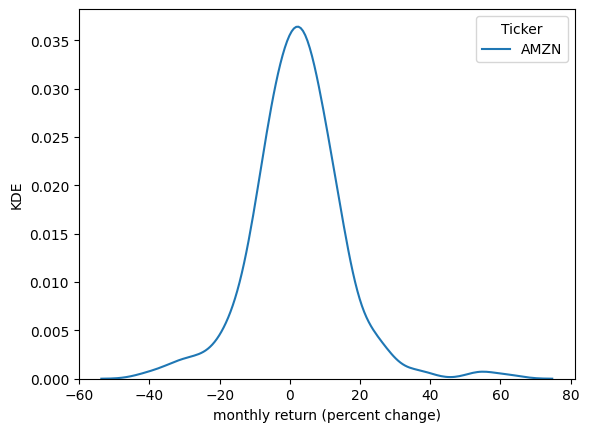

18.3.2.2Kernel density estimates¶

Kernel density estimates (KDE) provide a simple way to estimate and visualize the density of a distribution.

If you are not familiar with KDEs, you can think of them as a smoothed histogram.

Let’s have a look at a KDE formed from the Amazon return data.

fig, ax = plt.subplots()

sns.kdeplot(x_amazon, ax=ax)

ax.set_xlabel('monthly return (percent change)')

ax.set_ylabel('KDE')

plt.show()

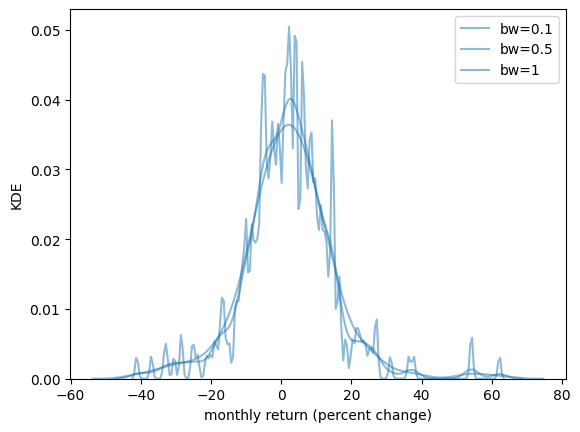

The smoothness of the KDE is dependent on how we choose the bandwidth.

fig, ax = plt.subplots()

sns.kdeplot(x_amazon, ax=ax, bw_adjust=0.1, alpha=0.5, label="bw=0.1")

sns.kdeplot(x_amazon, ax=ax, bw_adjust=0.5, alpha=0.5, label="bw=0.5")

sns.kdeplot(x_amazon, ax=ax, bw_adjust=1, alpha=0.5, label="bw=1")

ax.set_xlabel('monthly return (percent change)')

ax.set_ylabel('KDE')

plt.legend()

plt.show()

When we use a larger bandwidth, the KDE is smoother.

A suitable bandwidth is not too smooth (underfitting) or too wiggly (overfitting).

18.3.2.3Violin plots¶

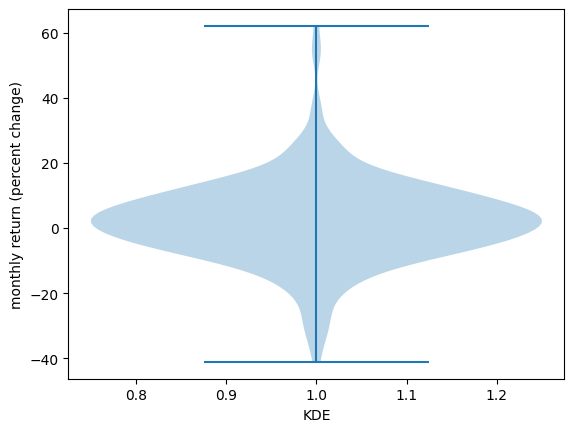

Another way to display an observed distribution is via a violin plot.

fig, ax = plt.subplots()

ax.violinplot(x_amazon)

ax.set_ylabel('monthly return (percent change)')

ax.set_xlabel('KDE')

plt.show()

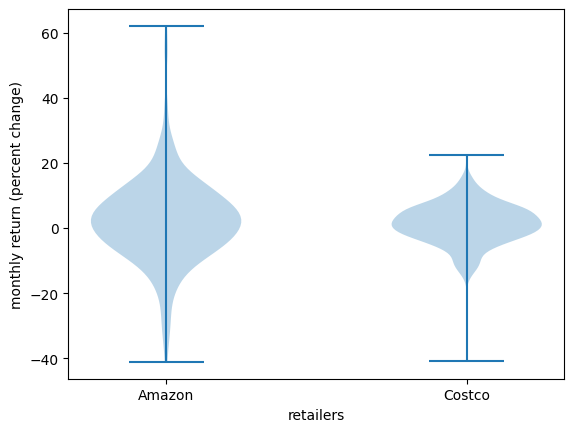

Violin plots are particularly useful when we want to compare different distributions.

For example, let’s compare the monthly returns on Amazon shares with the monthly return on Costco shares.

df = yf.download('COST', '2000-1-1', '2024-1-1', interval='1mo')

prices = df['Close']

x_costco = prices.pct_change()[1:] * 100Output

fig, ax = plt.subplots()

ax.violinplot([x_amazon['AMZN'], x_costco['COST']])

ax.set_ylabel('monthly return (percent change)')

ax.set_xlabel('retailers')

ax.set_xticks([1, 2])

ax.set_xticklabels(['Amazon', 'Costco'])

plt.show()

18.3.3Connection to probability distributions¶

Let’s discuss the connection between observed distributions and probability distributions.

Sometimes it’s helpful to imagine that an observed distribution is generated by a particular probability distribution.

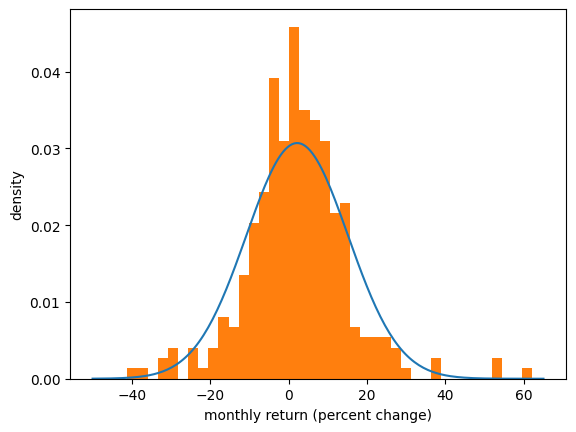

For example, we might look at the returns from Amazon above and imagine that they were generated by a normal distribution.

(Even though this is not true, it might be a helpful way to think about the data.)

Here we match a normal distribution to the Amazon monthly returns by setting the sample mean to the mean of the normal distribution and the sample variance equal to the variance.

Then we plot the density and the histogram.

μ = x_amazon.mean()

σ_squared = x_amazon.var()

σ = np.sqrt(σ_squared)

u = scipy.stats.norm(μ, σ)x_grid = np.linspace(-50, 65, 200)

fig, ax = plt.subplots()

ax.plot(x_grid, u.pdf(x_grid))

ax.hist(x_amazon, density=True, bins=40)

ax.set_xlabel('monthly return (percent change)')

ax.set_ylabel('density')

plt.show()

The match between the histogram and the density is not bad but also not very good.

One reason is that the normal distribution is not really a good fit for this observed data --- we will discuss this point again when we talk about heavy tailed distributions.

Of course, if the data really is generated by the normal distribution, then the fit will be better.

Let’s see this in action

- first we generate random draws from the normal distribution

- then we histogram them and compare with the density.

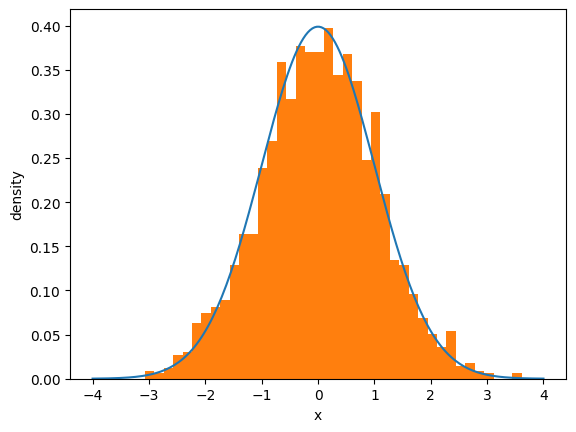

μ, σ = 0, 1

u = scipy.stats.norm(μ, σ)

N = 2000 # Number of observations

x_draws = u.rvs(N)

x_grid = np.linspace(-4, 4, 200)

fig, ax = plt.subplots()

ax.plot(x_grid, u.pdf(x_grid))

ax.hist(x_draws, density=True, bins=40)

ax.set_xlabel('x')

ax.set_ylabel('density')

plt.show()

Note that if you keep increasing , which is the number of observations, the fit will get better and better.

This convergence is a version of the “law of large numbers”, which we will discuss later.

Creative Commons License – This work is licensed under a Creative Commons Attribution-ShareAlike 4.0 International.